– Abul Kalam Azad Sulthan, Advocate, High Court of Judicature at Madras and Madurai Bench of Madras High Court & Partner, Spicy Law Firm.

I. Introduction

The rapid advancement of Artificial Intelligence (AI) technology has brought about a plethora of opportunities and challenges that were once confined to the realms of science fiction. As AI systems become increasingly sophisticated and integrated into various aspects of our lives, it is crucial to establish a robust legal framework to ensure their responsible development and deployment. This blog post delves into the intricate legal landscape surrounding AI, exploring the existing regulations, ethical considerations, and the pressing need for a comprehensive legal framework. By understanding the legal implications of AI, we can navigate this transformative technology while safeguarding fundamental rights, promoting innovation, and fostering trust in this ever-evolving field. Whether you are a technology enthusiast, a policymaker, or simply someone curious about the future of AI, this post aims to provide valuable insights and spark a thoughtful discussion on the legal framework that will shape the trajectory of AI’s impact on society.

A. Definition of Artificial Intelligence (AI)

Artificial Intelligence (AI) is a broad and evolving field that encompasses the development of intelligent systems capable of performing tasks that typically require human-like cognition and decision-making abilities. At its core, AI involves the creation of algorithms and computational models that can learn from data, recognize patterns, reason, and make predictions or decisions.

While there is no universally accepted definition of AI, it is generally understood as the simulation of human intelligence processes by machines, especially computer systems. This includes capabilities such as visual perception, speech recognition, natural language processing, problem-solving, learning, and decision-making. AI systems can be designed to operate autonomously or in collaboration with humans, leveraging vast amounts of data and computational power to augment and enhance human intelligence in various domains.

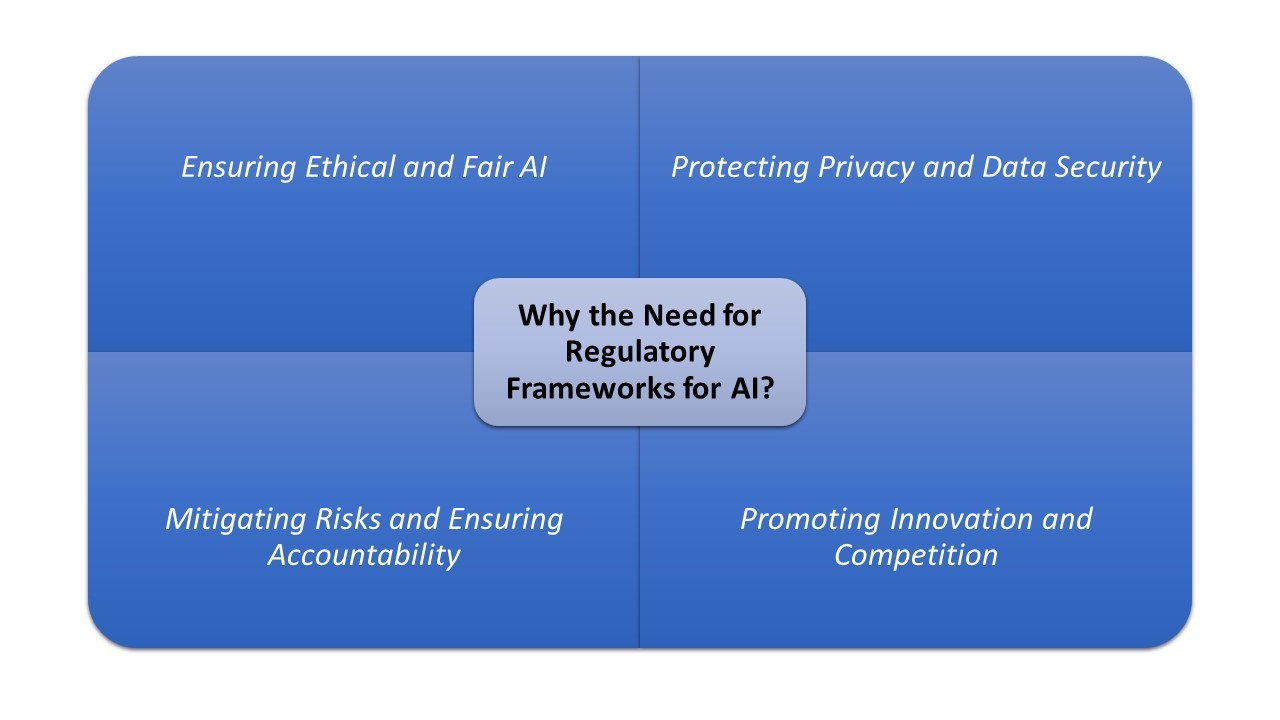

The need for regulation in AI to protect rights, promote ethical use, and ensure accountability.

The rapid advancement of artificial intelligence (AI) technologies has brought about immense potential for transformative change across various sectors, from healthcare and transportation to finance and education. However, this progress also raises critical concerns about the ethical implications and potential risks associated with the development and deployment of AI systems. As such, there is a pressing need for a robust regulatory framework to protect fundamental rights, promote ethical use, and ensure accountability.

The absence of clear guidelines and oversight mechanisms could lead to unintended consequences, such as violations of privacy, discrimination, and even threats to human safety. AI systems are increasingly being used in decision-making processes that directly impact individuals’ lives, from job applications to loan approvals and criminal sentencing. Without proper regulation, these systems could perpetuate biases and unfair practices, undermining the principles of equality and due process. Furthermore, the lack of transparency and accountability measures could result in situations where it is difficult to attribute responsibility for harmful outcomes caused by AI systems. A comprehensive regulatory framework is crucial to address these concerns, establishing clear ethical principles, standards, and mechanisms for oversight and redress.

Review of key laws related to AI (e.g., data protection, intellectual property).

The legal framework surrounding Artificial Intelligence (AI) is a complex and rapidly evolving landscape, with various laws and regulations coming into play. One of the key areas is data protection, as AI systems often rely on large amounts of data for training and operation. The General Data Protection Regulation (GDPR) in the European Union and similar laws in other jurisdictions impose strict rules on the collection, processing, and storage of personal data, which can significantly impact AI development and deployment.

Intellectual property rights are another critical aspect to consider. AI systems can generate outputs that may be subject to copyright, patent, or trade secret protection. The ownership and licensing of these AI-generated works can be a contentious issue, as existing intellectual property laws may not be well-suited to address the unique challenges posed by AI. Additionally, AI systems themselves may be patentable or subject to trade secret protection, raising questions about the scope and enforceability of such protections.

Examination of global initiatives and frameworks (e.g., UNESCO, EU AI Act).

The development and deployment of Artificial Intelligence (AI) systems have raised concerns about potential risks and challenges, prompting various global initiatives and frameworks to address these issues. One notable effort is the UNESCO Recommendation on the Ethics of Artificial Intelligence, which provides a comprehensive set of guidelines for the ethical development and use of AI systems. This recommendation emphasizes principles such as human rights, inclusiveness, transparency, and accountability, aiming to ensure that AI systems respect fundamental human values and promote the well-being of humanity.

Another significant initiative is the European Union’s AI Act, adopted in 2024, which establishes a harmonized legal framework for AI systems across the EU. The AI Act takes a risk-based approach, classifying AI systems into different risk categories and imposing corresponding regulatory requirements. High-risk AI systems, such as those used in critical sectors like healthcare or law enforcement, are subject to stringent requirements for data governance, transparency, human oversight, and robustness testing. This framework seeks to strike a balance between fostering innovation and ensuring the safe and trustworthy development and deployment of AI systems within the EU.

A. Data Protection and Privacy – Analysis of laws like GDPR, CCPA, and their implications for AI.

The advent of Artificial Intelligence (AI) has ushered in a new era of data-driven innovation, but it has also raised significant concerns regarding data protection and privacy. Laws like the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) have emerged as crucial legal frameworks aimed at safeguarding individuals’ personal information in the digital age.

The GDPR, which has applied since May 2018, is a comprehensive regulation that governs the collection, processing, and storage of the personal data of individuals in the European Union (EU). It imposes stringent requirements on organizations, including establishing a lawful basis for processing (such as the individual’s consent), implementing robust data protection measures, and ensuring transparency in data handling practices. The CCPA, which came into effect in 2020, grants California residents significant control over their personal information, including the right to access and delete their data and to opt out of its sale. Both laws have far-reaching implications for AI systems that rely heavily on data collection and processing. Organizations developing AI solutions must ensure compliance with these laws to avoid hefty fines and maintain consumer trust.

B. Intellectual Property Rights – Exploration of ownership rights for AI-generated content and inventions.

The treatment of intellectual property rights (IPR) in AI-generated content and inventions is a complex and evolving area of law. As AI systems become more advanced and capable of creating original works or making inventive contributions, the question of who owns the resulting intellectual property becomes increasingly important.

Traditionally, intellectual property laws have been designed with human creators and inventors in mind. However, the rise of AI challenges these traditional frameworks. When an AI system generates content or makes an invention, it raises questions about whether the AI itself, the developers of the AI system, or the users of the AI should be considered the owners or authors of the resulting intellectual property. This issue has significant implications for various stakeholders, including technology companies, content creators, and inventors. Resolving these questions will require careful consideration of existing legal principles, potential policy implications, and the need to strike a balance between incentivizing innovation and protecting intellectual property rights.

C. Liability and Accountability – Discussion on legal liability in AI-related harms (e.g., autonomous vehicles).

Liability and accountability in the realm of artificial intelligence (AI) are crucial considerations, particularly in scenarios involving AI-related harms. The rapid advancement of AI technologies, such as autonomous vehicles, has brought forth complex legal challenges regarding the allocation of responsibility in the event of accidents or malfunctions.

One of the key discussions revolves around the potential liability of various parties involved in the development, deployment, and operation of AI systems. Manufacturers and developers of AI software and hardware may be held accountable for defects or negligence in the design or implementation of these systems. Additionally, the entities responsible for training and deploying AI models could face liability if their actions or omissions contribute to AI-related harms. Furthermore, the question of accountability extends to the end-users or operators of AI systems, as their actions or lack of proper oversight may also play a role in determining liability. Establishing clear legal frameworks and guidelines is crucial to ensure fair and equitable attribution of responsibility in AI-related incidents.

D. Ethical Considerations – Legal implications of bias, discrimination, and fairness in AI systems.

The development and deployment of AI systems raise significant ethical concerns regarding bias, discrimination, and fairness. These issues have far-reaching legal implications that must be carefully considered and addressed.

AI systems can perpetuate and amplify existing societal biases, leading to discriminatory outcomes that disproportionately impact certain groups based on factors such as race, gender, age, or socioeconomic status. For instance, if an AI system is trained on biased data or built on flawed algorithms, it may make unfair decisions, leading to unequal treatment or denial of opportunities for certain individuals or communities. This not only raises ethical concerns but also potentially violates anti-discrimination laws and human rights principles. Governments and regulatory bodies must establish robust legal frameworks to mitigate these risks, ensuring that AI systems are developed and deployed in a manner that upholds principles of fairness, non-discrimination, and equal treatment under the law.

Sector-Specific Regulations

A. AI in Healthcare – Regulatory standards for AI applications in medical diagnostics and treatment.

Artificial Intelligence (AI) has the potential to revolutionize the healthcare industry by enhancing medical diagnostics and treatment. However, the integration of AI technology into medical practices raises critical ethical and regulatory concerns that must be addressed to ensure patient safety and data privacy. As AI systems become more advanced and capable of making complex decisions, it is crucial to establish robust regulatory standards to govern their development and deployment in healthcare settings.

Regulatory bodies, such as the Food and Drug Administration (FDA) in the United States and the European Medicines Agency (EMA), are actively working to develop guidelines and frameworks for the evaluation and approval of AI-based medical devices and software. These guidelines aim to ensure that AI applications in medical diagnostics and treatment meet rigorous standards for accuracy, reliability, and transparency. Additionally, they address issues related to data privacy, algorithmic bias, and the potential for AI systems to perpetuate or exacerbate existing healthcare disparities. By establishing clear regulatory standards, healthcare providers and patients can have confidence in the safety and efficacy of AI-powered medical technologies, while also promoting innovation and technological advancement in the field.

B. AI in Finance – Overview of regulations governing AI in financial services (e.g., algorithmic trading).

Artificial Intelligence (AI) has become an increasingly prevalent force in the financial services industry, particularly in areas such as algorithmic trading, risk management, and fraud detection. As AI systems continue to play a more significant role in decision-making processes, regulatory bodies have recognized the need to establish a robust legal framework to govern their use.

One notable example is the European Union’s Artificial Intelligence Act, which creates a harmonized regulatory framework for AI across the EU. The Act takes a risk-based approach, classifying AI systems into different risk categories based on their intended use and potential impact. For high-risk AI applications, such as systems used to evaluate the creditworthiness of individuals, the Act mandates stringent requirements, including robust risk management systems, human oversight, and transparency measures. Additionally, regulatory bodies like the Financial Conduct Authority (FCA) in the UK and the Securities and Exchange Commission (SEC) in the US have issued guidance and recommendations to ensure the responsible and ethical use of AI in financial services, particularly in areas like algorithmic trading, where AI systems can significantly impact market integrity and investor protection.

C. AI in Employment – Legal issues related to hiring algorithms and workplace surveillance technology.

The use of Artificial Intelligence (AI) in employment practices, particularly in hiring algorithms and workplace surveillance technology, has raised significant legal concerns. These technologies have the potential to perpetuate and amplify existing biases, leading to discriminatory hiring practices and infringing on employee privacy rights.

Hiring algorithms, which analyze vast amounts of data to identify and rank job candidates, can inadvertently discriminate against certain groups based on factors such as race, gender, or age. This can occur due to biases in the training data or the algorithms themselves. To mitigate these risks, companies must ensure that their AI systems comply with anti-discrimination laws and undergo rigorous testing for fairness and accuracy. Additionally, there is a need for transparency and accountability in the development and deployment of these algorithms, allowing for external audits and enabling candidates to understand the decision-making process. Regarding workplace surveillance technology, such as monitoring employee emails, computer activities, and even physical movements, there are concerns about privacy violations and the potential for misuse of collected data. Employers must strike a balance between legitimate business interests and respecting employee privacy rights, adhering to relevant data protection laws and implementing robust data governance policies.
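To make the fairness testing of hiring algorithms mentioned above more concrete, here is a minimal Python sketch of one common screening heuristic, the “four-fifths rule” from the US EEOC’s Uniform Guidelines on Employee Selection Procedures: a group whose selection rate is below 80% of the highest group’s rate is generally treated as showing evidence of adverse impact. The function names, the data layout, and the sample figures are hypothetical and chosen purely for illustration. This is a first-pass check, not a legal test of discrimination; a real audit would also consider statistical significance and the law that applies.

```python
from collections import Counter


def selection_rates(outcomes):
    """Return the share of selected candidates per group.

    `outcomes` is an iterable of (group, selected) pairs, where
    `selected` is True if the algorithm advanced the candidate.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        raise ValueError("no candidates were selected in any group")
    return {group: rate / best for group, rate in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """List groups whose ratio falls below the threshold (four-fifths by default)."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


# Hypothetical screening results: group A has 50 of 100 candidates advanced,
# group B has 30 of 100.
sample = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)
```

With the sample data, `flag_groups(sample)` flags group B, whose selection rate (30%) is 60% of group A’s (50%) and therefore below the four-fifths threshold.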

Challenges in Creating a Legal Framework

A. Rapid Technological Advancement – The lag between technology innovation and legislation.

The rapid pace of technological advancement, particularly in the field of Artificial Intelligence (AI), has outpaced the ability of legal frameworks to keep up. This lag between innovation and legislation poses significant challenges, as AI systems are being developed and deployed at an unprecedented rate, often without clear regulatory guidelines or oversight mechanisms in place.

As AI systems become more sophisticated and ubiquitous, the potential risks and implications for individuals, businesses, and society as a whole become increasingly complex. Issues such as data privacy, algorithmic bias, intellectual property rights, and liability for AI-driven decisions demand careful consideration and robust legal frameworks. However, the speed at which AI technology evolves often leaves policymakers and lawmakers playing catch-up, struggling to address emerging concerns before they become entrenched problems. This disconnect between the rapid evolution of AI and the slower pace of legal and regulatory processes highlights the urgent need for proactive, forward-thinking approaches to ensure responsible and ethical development and deployment of AI systems.

B. Jurisdictional Issues – Complications arising from cross-border data flows and varying national laws.

The cross-border nature of data flows and the varying national laws governing artificial intelligence (AI) pose significant challenges in establishing a harmonized legal framework. As AI systems often rely on data sourced from multiple jurisdictions, conflicting regulatory requirements can create ambiguities and legal uncertainties for organizations operating across borders.

Jurisdictional issues arise due to the divergent approaches taken by different countries in regulating AI. Some nations have implemented comprehensive AI governance frameworks, while others have adopted a more piecemeal approach or lack specific AI regulations altogether. This regulatory fragmentation can lead to situations where an AI system complies with the laws of one jurisdiction but potentially violates the laws of another. Additionally, the extraterritorial application of certain national laws further complicates matters, as organizations may be subject to the regulatory requirements of countries where they do not have a physical presence but process data from those jurisdictions.

C. Balancing Innovation and Regulation – The challenge of creating a regulatory environment that encourages innovation while protecting the public interest.

The development and deployment of Artificial Intelligence (AI) technologies present a unique challenge for policymakers and regulators. On one hand, AI has the potential to drive significant innovation and economic growth, with applications across industries ranging from healthcare to finance to transportation. Encouraging the responsible development and adoption of AI could lead to breakthroughs that improve our quality of life and solve complex problems. On the other hand, AI also carries risks and ethical concerns, such as privacy violations, algorithmic bias, and potential misuse for malicious purposes.

Striking the right balance between promoting innovation and ensuring adequate safeguards is a delicate task. Overly restrictive regulations could stifle innovation and hinder the potential benefits of AI, while a lack of oversight could lead to unintended consequences and harm to individuals or society. Policymakers must carefully consider the implications of AI and craft a regulatory framework that fosters a responsible and ethical approach to its development and deployment. This may involve establishing guidelines for data privacy, transparency, and accountability, as well as addressing issues related to liability, safety, and the ethical use of AI systems. Ultimately, the goal should be to create a regulatory environment that encourages innovation while protecting the public interest and ensuring that AI is developed and used in a manner that aligns with societal values and human rights.

VI. Future Directions

A. Trends in AI Regulation – Emerging regulations and their potential impacts.

As the rapid advancement of Artificial Intelligence (AI) technology continues to reshape various industries and aspects of our daily lives, governments worldwide are grappling with the challenge of establishing appropriate regulatory frameworks. The emergence of AI regulation is a crucial trend that seeks to strike a balance between fostering innovation and mitigating potential risks associated with these powerful technologies.

One notable trend is the growing emphasis on ethical AI principles and guidelines. Several countries and international organizations have proposed frameworks that prioritize transparency, accountability, and the protection of fundamental human rights. These guidelines aim to ensure that AI systems are developed and deployed responsibly, minimizing the risks of bias, discrimination, and potential misuse. Additionally, there is a growing focus on data privacy and security, as AI systems often rely on vast amounts of personal data, raising concerns about data protection and individual privacy rights. Emerging regulations in this area aim to establish clear rules and safeguards to protect individuals’ data and prevent unauthorized access or misuse.

B. Importance of Stakeholder Engagement – The role of researchers, developers, policymakers, and the public in shaping legal frameworks.

C. Recommendations for Policymakers – Suggested approaches for creating adaptable and robust legal frameworks.

As the development and deployment of artificial intelligence (AI) systems continue to accelerate, it is crucial for policymakers to establish adaptable and robust legal frameworks. These frameworks should strike a balance between fostering innovation and addressing the potential risks and challenges posed by AI technologies. Here are some recommendations for policymakers:

First, policymakers should adopt a risk-based approach to AI regulation. This approach involves assessing the potential risks associated with different AI applications and tailoring the regulatory measures accordingly. Low-risk applications, such as those used for personal assistance or entertainment, may require minimal regulation, while high-risk applications, such as those used in healthcare, finance, or critical infrastructure, may necessitate more stringent oversight and safeguards. By adopting a risk-based approach, policymakers can ensure that regulations are proportionate and do not unnecessarily stifle innovation.

Second, policymakers should promote the development of ethical and trustworthy AI systems. This can be achieved by establishing clear guidelines and standards for AI development, deployment, and use. These guidelines should address issues such as transparency, accountability, fairness, and privacy protection. Additionally, policymakers should encourage the adoption of best practices and self-regulatory mechanisms within the AI industry, fostering a culture of responsible AI development and deployment.
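The risk-based approach in the first recommendation can be illustrated with a small Python sketch. It assumes a simple two-tier scheme built only from the examples named in this post; the tier names, domain lists, and obligation labels are hypothetical placeholders, not categories taken from any statute.

```python
from enum import Enum
from typing import List, Optional


class RiskTier(Enum):
    LOW = "low"
    HIGH = "high"


# Hypothetical domain lists, taken from the examples given in this post.
HIGH_RISK_DOMAINS = {"healthcare", "finance", "critical infrastructure"}
LOW_RISK_DOMAINS = {"personal assistance", "entertainment"}

# Hypothetical obligation labels; a real framework would define these in law.
OBLIGATIONS = {
    RiskTier.LOW: ["minimal regulation"],
    RiskTier.HIGH: [
        "data governance",
        "transparency",
        "human oversight",
        "robustness testing",
    ],
}


def classify(domain: str) -> Optional[RiskTier]:
    """Map an application domain to a risk tier, or None if it is not listed."""
    key = domain.strip().lower()
    if key in HIGH_RISK_DOMAINS:
        return RiskTier.HIGH
    if key in LOW_RISK_DOMAINS:
        return RiskTier.LOW
    return None


def obligations_for(domain: str) -> List[str]:
    """Return the obligations attached to a domain's tier.

    Domains not listed above fall back to an individual assessment
    instead of being silently treated as low risk.
    """
    tier = classify(domain)
    if tier is None:
        return ["case-by-case risk assessment"]
    return OBLIGATIONS[tier]
```

The design choice worth noting is the fallback: an application that fits neither list is sent for individual assessment, which keeps the scheme proportionate without assuming that anything unlisted is harmless.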

Conclusion

Summary of Key Points – Recap the importance of a legal framework for AI.

The rapid advancements in artificial intelligence (AI) technology have brought about transformative changes across various sectors, from healthcare and finance to transportation and manufacturing. However, as AI systems become increasingly sophisticated and ubiquitous, it is crucial to establish a robust legal framework to ensure their responsible development and deployment. A comprehensive legal framework for AI serves as a safeguard against potential misuse, bias, and unintended consequences, while also promoting innovation and fostering public trust.

The importance of a legal framework for AI cannot be overstated. It provides a clear set of guidelines and regulations that govern the design, development, and implementation of AI systems. This framework ensures accountability, transparency, and adherence to ethical principles, such as privacy, fairness, and non-discrimination. Additionally, it establishes mechanisms for redress in cases of harm or violation of rights, protecting both individuals and organizations. By establishing clear boundaries and expectations, a legal framework for AI fosters an environment of trust, enabling the responsible adoption and growth of this transformative technology while mitigating potential risks and safeguarding fundamental human rights.

In conclusion, the rapid evolution of AI technology presents us with both unprecedented opportunities and significant challenges that we must address with urgency and foresight. A well-defined legal framework is not merely a bureaucratic necessity; it is essential for fostering innovation, protecting individual rights, and ensuring ethical standards in the deployment of AI systems. As stakeholders—including technologists, policymakers, and the general public—we must engage in open dialogues about the ethical implications and legal responsibilities that come hand in hand with this transformative technology. Together, we can shape laws and regulations that not only encourage progress but also instill public trust and accountability in AI. I encourage each of you to join this critical conversation, whether by educating yourselves further, advocating for responsible policies, or voicing your thoughts in community forums. Let us work collaboratively to ensure that AI serves humanity’s best interests now and in the future.
